Agility

Context: We have a goal requiring creative effort. We want to succeed.

Overplanning increases risk …

When we embark on a creative project, success seems certain. We plan optimistically, and then, almost immediately after we start, delays and challenges emerge. The plan and the likely outcome keep diverging. We become more realistic. We double down on effort. We plan in more detail, but encounter even more problems.

Forces

Scarce resources—specialists, money, equipment, facilities—often need to be reserved. If we can’t plan and reserve resources for when we need them, we may waste effort or capital.

But innovation is naturally unpredictable. How can we guarantee market demand, material costs, employee skills, market perception, legal constraints, etc. when there are so many dependencies? In fact, many projects count on technical or market uncertainty to gain a lead: if others fear to tread, we might face little competition.

Time is our enemy, because opportunities decay with time. Seizing an opportunity before it disappears may require extraordinary effort. We can produce brief bursts of creative work and long slogs of mechanical work, but may not be able to sustain a long slog of creativity.

Risks become reality as the project continues. To reduce planning time, we often construct an idealistic plan that assumes we have sufficient staff, budget, material, space, legal standing, authorization, etc. to proceed uninterrupted. If we reflect on our optimistic approach, we can be almost certain the project will take longer. Because the project plan doesn’t explicitly incorporate all the risks, or because plans with contingencies complicate decision making, we often just start working, hoping that if risks become real we will find a way to cope.

Our optimism and eagerness to please can bias our time and cost estimates too low, and our market and value estimates too high. We often have data from previous projects that can correct the bias, but full transparency can reveal late, expensive and unrewarded efforts, and this can lead to punishing those who tell the truth. And so the truth, despite its value, often remains hidden.

Starting work, recording estimates and tracking actual results lets us improve our predictions as work completes. The ratios of actual results to estimates are multipliers that correct our bias in later work. However, if we are not exercising all the elements of our project (initiation, purchasing, assembly, marketing, order processing, delivery and returns), our multiplier doesn’t incorporate all of our biases. What if there are legal or packaging challenges in delivery, for example, or lots of returns?
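The multiplier idea can be sketched in a few lines of Python. This is a minimal illustration, not the author’s method: it assumes we log an estimate and an actual for each completed piece of work, tagged with a project phase, and it defines the multiplier as total actual effort divided by total estimated effort for that phase. The phase names, the records, and the functions `multipliers_by_phase` and `corrected_estimate` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical records of completed work: (phase, estimated days, actual days).
# Phases and numbers are illustrative only.
completed = [
    ("assembly", 5, 8),
    ("assembly", 3, 4),
    ("purchasing", 10, 17),
    ("purchasing", 2, 3),
]


def multipliers_by_phase(records):
    """Ratio of total actual effort to total estimated effort, per phase."""
    estimated = defaultdict(float)
    actual = defaultdict(float)
    for phase, est, act in records:
        estimated[phase] += est
        actual[phase] += act
    return {phase: actual[phase] / estimated[phase] for phase in estimated}


def corrected_estimate(phase, raw_estimate, multipliers):
    """Scale an optimistic estimate by that phase's historical multiplier.

    Returns None when the phase has never been exercised, because we have
    no data with which to correct its bias."""
    m = multipliers.get(phase)
    return None if m is None else raw_estimate * m


mults = multipliers_by_phase(completed)
print(mults)
print(corrected_estimate("assembly", 6, mults))
print(corrected_estimate("delivery", 4, mults))
```

Returning `None` for a phase with no history makes the gap described above explicit: if we have never exercised delivery, we have no multiplier with which to correct our delivery estimates.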

Detailed contracts with fixed bids seem like a great way to get the best value for the least cost. However, contracts bind both parties to terms that may prove suboptimal. In new product development, customer certainty is unlikely: most features customers claim to need are barely used, and once customers experience the product they will likely want changes. The provider could have made bad estimates, driving them to abandonment or bankruptcy. A huge list of over-budget and abandoned projects shows that the detailed contract/fixed bid approach to projects can easily lead to ruin (Charette 2005).

Similarly, our market and value estimates are often too high. It is trivial to find examples of projects that didn’t realize their promoted value: publicly traded companies, venture-backed startups, and crowdfunded products experience high rates of failure. Sadly, many of those projects spent all the money allocated (or more) before calling it quits.

… therefore, rapidly measure, experiment, learn, and adapt

Before embarking on a project, determine what new knowledge is most important to the project’s success. If we identify our riskiest assumptions and experiment early to reduce those risks, we increase our likelihood of overall success. Sometimes, we discover that a risk is actually a terrible reality; in this case, we can reconsider our goal before wasting time and money on a dud.

Here are some examples for different fields.

Lean Startup

Managers may not know a proposed product’s realistic market size or value. If we make our value assumptions explicit, then test our assumptions early, we can improve the value of our work or avoid wasting money. A technique called Lean Startup exploits this principle: Lean Startup techniques discover value. Early in product investment, it isn’t feasible to directly measure whether people will buy a product without returning it. Instead, we must find feasible, “low fidelity” metrics that can help us measure market size and price sensitivity. Lean Startup expresses market-size hypotheses in terms of these low-fidelity metrics, then conducts iterative experiments to gain greater market knowledge. The mantra of Lean Startup is “Build, Measure, Learn,” where the “Build” part is extremely low-cost.

Scrum

Managers may not know a proposed product’s development or design cost. If we make our development assumptions and practices explicit, then test our assumptions and practices cheaply, we can focus our development on work that leads to value for the least effort. Agile development techniques, such as Scrum, exploit this principle. Scrum investigates cost and allows those who understand value to redirect activities to higher-value, lower-cost work. When we start development of a new product, we have little to help us predict completion time and cost. When we assume a customer has completely specified the outcome, as we have experienced in academic exercises, we proceed academically: we start working on a single component of the product, finishing it before moving to another component. There are two problems with this approach. First, no customer can use the product until every component is finished, so we learn little about its value until late. Second, testing, packaging and delivery wait until the end, so we discover much of the real cost when it is most expensive to change course.

So Scrum first decomposes products into iterations, seeking to produce partial products that have customer utility. Instead of segmenting the product into components, and developing each component to completion, Scrum identifies the most important and least expensive end-user features for the next iteration, and completes only the necessary portions of each component to support those features. Developers seek to produce enough work to deliver value, and so development must then include testing, packaging, delivery and customer support. Through these means, developers get rapid feedback on value, and can reprioritize features, quality and all other product characteristics to maximize customer value in minimum time and cost.
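The sketch below illustrates one way to pick “the most important and least expensive” features for an iteration. Scrum does not prescribe this formula: ranking by value per unit of cost and greedily filling a fixed capacity is an assumption made for illustration, and the `Feature` class, the `plan_iteration` function, the backlog items and all numbers are hypothetical. Costs are assumed to be positive.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    value: float  # relative customer value (hypothetical units)
    cost: float   # estimated effort, e.g. ideal days (hypothetical, > 0)


def plan_iteration(backlog, capacity):
    """Pick features for the next iteration, highest value per unit cost first,
    skipping any feature that no longer fits in the remaining capacity."""
    ranked = sorted(backlog, key=lambda f: f.value / f.cost, reverse=True)
    chosen, remaining = [], capacity
    for feature in ranked:
        if feature.cost <= remaining:
            chosen.append(feature)
            remaining -= feature.cost
    return chosen


# Hypothetical backlog for illustration only.
backlog = [
    Feature("record session", value=10, cost=5),
    Feature("share link", value=6, cost=2),
    Feature("custom themes", value=2, cost=4),
    Feature("export video", value=7, cost=6),
]

for f in plan_iteration(backlog, capacity=10):
    print(f.name)
```

A greedy value-per-cost ordering is simple and easy to explain, but it is not guaranteed to maximize total value for a given capacity; in practice, whoever owns the backlog would also weigh dependencies and risk, and would re-rank after each iteration’s feedback.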

Lean Manufacturing

Managers may not know how to most rapidly manufacture a high quality product. If we make manufacturing assumptions and practices explicit, then test our assumptions and practices, we can refine our manufacturing to reduce time-to-market, increase quality and reduce returns. Lean manufacturing techniques, such as the Toyota Production System, exploit this principle.

Agility

I lump these and other related techniques, such as DevOps (rapid deployment), Getting Things Done (managing personal goals) and Design Thinking (designing for customer delight), into one bucket called Agility. Agility is exactly what it sounds like: a philosophy that hones our ability to turn on a dime, whether we are exploring a market, designing a product, manufacturing a machine or managing our own time. All Agility techniques focus on measurement, experimentation, and adaptation. Experts in one type of Agility are typically interested in the other fields. They all face similar challenges, and the solutions are similar. For example, iteration overhead can limit how rapidly we learn; generalized skills for rapid adaptation can reduce field-specific excellence; and the metrics that experiments use to improve organizational results can destabilize individual careers.

This work considers Agility techniques as a combined whole, and decomposes them into common patterns. Each pattern describes a problem in context and the forces that constrain a reasonable solution. Only after describing a problem will this work discuss a solution. This approach helps you match a situation to a solution. Every project is different, but if you know a bunch of great patterns, you can succeed in many different types of situations.

This effort began when I tried to analyze the many agile development techniques, their justification, and their relationship to each other. By manually clustering together practices from Scrum and XP, I could see what common characteristics they had. I decided to try folding in Lean Startup practices, Getting Things Done practices and Lean Manufacturing. I realized how much every field borrowed from others.

As I read articles about agile practices, I grew frustrated that few described the problems agile was designed to fix. Such descriptions of rituals without motivation rightly caused critics to compare agile with religious zealotry. Increasingly, my agile training efforts included historical reviews of the industry disasters that led to agile’s popularity. This also led me to use formal patterns, which explicitly incorporate detailed justification (Alexander 1979).

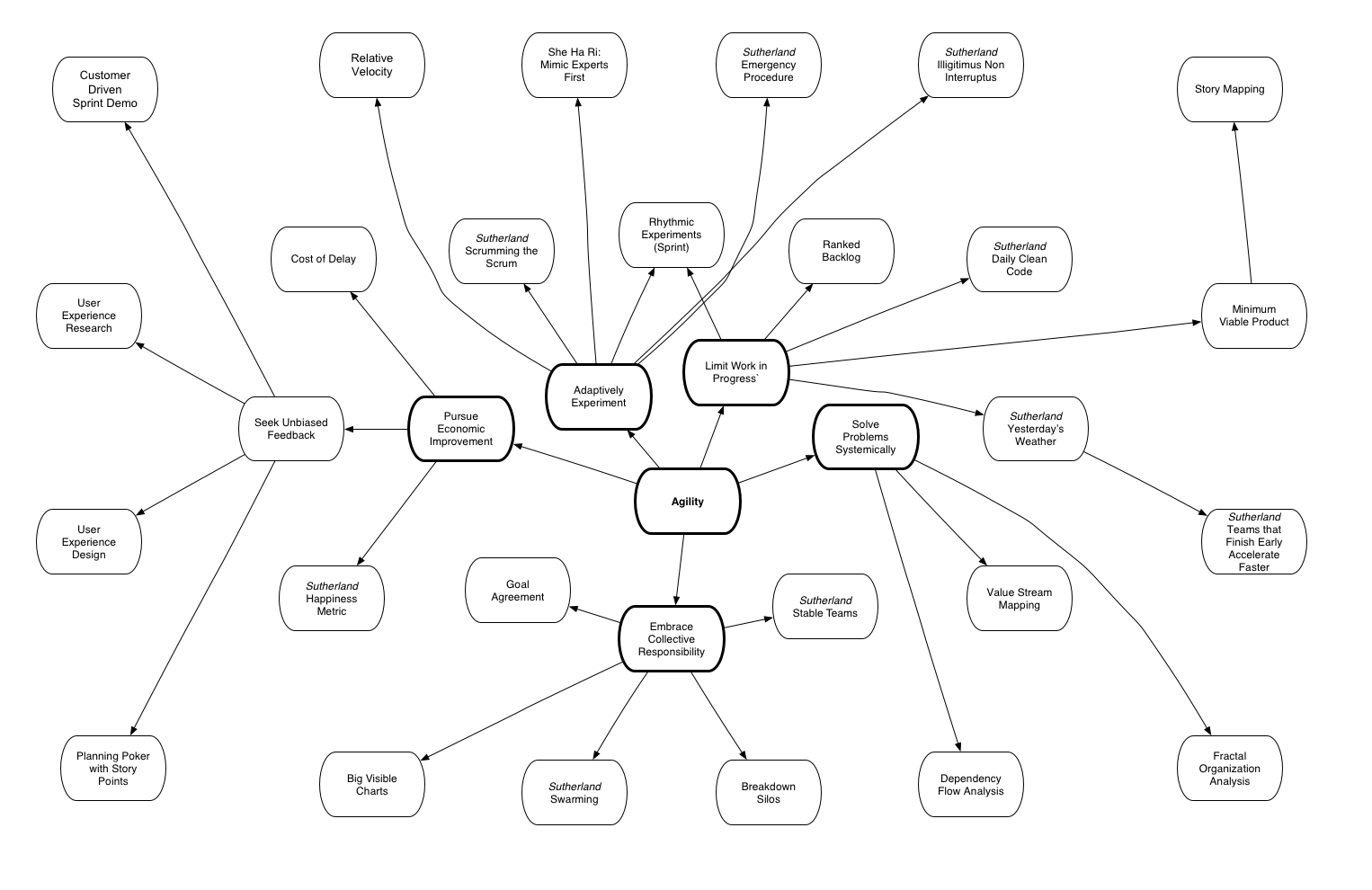

A pattern language is a system of patterns that interoperate to compose an entire field. Most pattern languages start with a root pattern. This is the root pattern of the Agility pattern language.

Examples

Cars Direct

In 1998, before the term “Lean Startup” was coined, Bill Gross of ideaLab, a startup incubator, tried to buy a car online. It was incredibly frustrating. He wondered whether he could make it much easier. He theorized that people might want to specify the car they wanted, plunk down a thousand-dollar deposit, and pay the balance when taking delivery of the vehicle at home or work. Everyone at his incubator disagreed, arguing that people were scared to use their credit cards online.

Gross was the head of the company, and thought there would be some users who would try it. He asked Scott Painter to join and create a website to sell cars. A month after joining, Painter was still forming the company as a legal entity. Gross said, “Stop doing that, we don’t even know if the idea makes sense. Just build the website.” Two weeks later, Painter was talking to Honda, Toyota and other companies about how to get the cars when people wanted them. “Forget that too, Scott,” Gross said, “We don’t even know if people will buy cars online.” Two weeks later, Painter showed Gross how the online configurator worked, with drop-down menus for choosing among all the makes, models and options.

Gross asked him instead to create the simplest possible web site, with a free-form field where a user could specify make, model and options, and fields for the name, address, email and credit card of the person making the order. And then make the site charge people a $1000 deposit. “And then,” Gross said, “if someone does order a car, someone at ideaLab can just go down to the Monrovia Auto Mall, buy the car requested and deliver it to the customer. We’re going to lose money on each car, if anyone even wants one, and we’ll deliver it to their house. So don’t worry about incorrect configurations; we’ll call someone on the phone if that’s a problem. We just want to see if someone would put $1000 on a credit card. We don’t even have a merchant account, so how would we charge them?”

Eleven weeks in, on a Thursday night, Gross convinced Painter to deploy the rudimentary site. They went home. On Friday morning, Gross asked Painter how it went. Painter said, “Well, we sold four cars last night.” And Gross responded, “Well hurry up and turn the site off!” As planned, they went down to Monrovia Auto Mall to buy the cars, and took their customers through the whole buying experience, learning a lot in the process, losing $2500 per car.

Gross and Painter learned what they needed to learn, which was that people really would, with no advertising, buy cars online. They took the site down, spent six months building a more complete site, brought it live as carsdirect.com, and ultimately sold the company for $1.1 billion (Gross 2014).

Lean Startup was the right choice, because the greatest unknown was whether customers would buy cars online. Gross and Painter knew how to build a website, they knew how to deliver a car, but they didn’t know whether there was a market.

Senior Concierge

One startup company I helped wanted to deliver online services for seniors. Venture capitalist buddies were very interested in medical concierge services, since they were having to manage medical issues for their own aging parents. The market size was unknown, and we thought early adopters likely used Facebook, so we created Facebook ad campaigns as if the product had already been developed.

Initially, we chose a low-fidelity measure of market interest: click-through rate on ads to active seniors in key markets. The first campaign emphasized general concierge services and got a 1% click-through rate. The second offered two options in what’s called an A/B test: medical concierge services (appointment and drug-taking reminders) vs. volunteer and work opportunities. The greatest interest was in volunteer and work opportunities, so our third campaign tested whether volunteer or paid opportunities were more interesting. The fourth tested whether our theories could be generalized to multiple markets, and whether people would click further to purchase the product.
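The text does not say how the campaigns’ results were compared. One common way to decide whether the difference between two click-through rates in an A/B test is larger than chance would explain is a two-proportion z-test, sketched below using only the Python standard library. The click and impression counts are invented for illustration, not data from these campaigns, and the normal approximation assumes reasonably large counts.

```python
from math import erfc, sqrt


def click_through_rate(clicks, impressions):
    return clicks / impressions


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Two-sided z-test for a difference between two click-through rates.

    Returns (z, p_value). Uses the pooled proportion under the null
    hypothesis that both ads share the same underlying rate."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical counts, NOT the campaign's actual data:
# variant A = medical concierge ad, variant B = volunteer/work ad.
z, p = two_proportion_z_test(clicks_a=80, views_a=10_000, clicks_b=140, views_b=10_000)
print(f"A: {click_through_rate(80, 10_000):.2%}  B: {click_through_rate(140, 10_000):.2%}")
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise; it says nothing about whether clicks turn into purchases, which is why the later campaigns tested further steps toward buying.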

Our initial theories were wrong: even though venture capitalists thought there was a big market for medical concierge services, the parents themselves weren’t interested. For an outlay of about $2000 in advertising fees, we made an important discovery: active seniors had a lot of free time, and were looking for opportunities to help society. Had we gone forward without measuring the market size, we would have spent enormous sums developing an expensive calendaring system, with drug reminders and connections to medical services, and likely discovered that people wouldn’t buy it.

Screencasting

In 2007, a team I led developed a cloud-based screencasting application with which a person could record a computer session. One team member suggested we use Scrum, a technique I’d never encountered. After reading everything I could find about it, we decided to use it. The results were surprising: with a team of just five engineers, we developed client applications that ran on Windows and Mac, and a clustered Java-based server system, in just a few months. Early iterations tried to develop the clients on a common infrastructure; they worked but were hard to revise and maintain. Because Scrum had us performing early releases, we could cheaply decide to switch to native infrastructures for the clients. In early iterations, we also tested our user interface and discovered that some interfaces were not intuitive. Because it was early in development, we could switch them at relatively low cost, and we did.

The system we built was highly resilient; it would continue to run even if a hosting server crashed. We didn’t expect to need this resiliency initially, but scalability was a huge risk identified early and we wanted to test whether we could build a resilient server farm. That decision saved our butts. Our first public demonstration was at a famous new-products conference, streamed live. We didn’t know about the streaming part. Under an unexpected load of 1,700 users, a host server crashed. The screencasting application quietly switched to a different server, and continued working. Gradually, one after another of our six servers crashed. Our team went rapidly to work identifying bugs, fixing them, and bringing servers back online, all while the conference was taking place. No user was interrupted, and no one but the developers knew this was happening. But behind the scenes there was a lot of human and machine activity. Our team got a reputation for bulletproof software.

This experience transformed me: I became a strong advocate for agile methods and ultimately became a Scrum Alliance Certified Enterprise Coach (a management consultant for agile organizations). I have a PhD in computer science and I have been programming since I was 15; now I was programming humans instead of machines.

Scrum seemed like the right choice for Screencasting, because our product marketers knew there were customers. What we didn’t know was whether we could produce a reliable, cost-effective cloud-based screencasting system. Unfortunately, after completing the application, we discovered the market for screencasting was limited, and the project was cancelled. My disappointment in this outcome led me to explore Lean Startup techniques.

Resulting Context

Organizations that sustain agility must adopt an experimental mindset. They see every project as something to measure and improve. They accept that we all make mistakes, and that the best way to manage projects is to make most mistakes inexpensive learning opportunities.

There are many difficulties in sustaining agility, unfortunately. When agile practices were emerging, many of us thought agile methods were so obviously superior that organizations would sustain them naturally. This turned out to be wrong. Experimentation that leads to rapid learning has a failure rate approaching 50%, and so experimentation has to be cheap or agile management becomes very costly. Furthermore, releasing products iteratively can also be expensive, and agile organizations may need to redesign how products are tested, packaged and delivered to reduce this cost (if you’ve heard the term “DevOps,” this is one such approach).
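The arithmetic behind “experimentation has to be cheap” can be made concrete. Assuming each experiment independently fails with probability f (the failure rate), the number of experiments until the first success is geometrically distributed with mean 1 / (1 - f), so the expected spend per successful experiment is the cost of one experiment divided by (1 - f). The function name below is hypothetical, and real experiments are rarely fully independent.

```python
def expected_cost_per_success(cost_per_experiment, failure_rate):
    """Expected total spend to obtain one successful experiment.

    Assumes experiments are independent and each fails with probability
    failure_rate, so the number of tries until the first success is
    geometric with mean 1 / (1 - failure_rate)."""
    if not 0 <= failure_rate < 1:
        raise ValueError("failure_rate must be in [0, 1)")
    return cost_per_experiment / (1 - failure_rate)


# Hypothetical experiment costs, compared at a 50% failure rate.
for cost in (5_000, 50_000, 500_000):
    print(cost, expected_cost_per_success(cost, failure_rate=0.5))
```

At a failure rate of 50%, each success costs on average twice what a single experiment costs. Halving the cost of each experiment halves the cost of each success, which is why making experiments cheap matters so much.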

Finally, due to their focus on metrics, agile methods can reveal dysfunctions in middle management; if an organization has poor management training, arrogant middle managers, or intolerant executives, this can easily kill the honesty required to sustain agility. Many of the sub-patterns discussed later can help compensate for these problems and help sustain agility.

Related Patterns

This is the lead pattern for the Agility pattern language. Four sub-patterns—Align to a Driving Purpose, Measure Economic Progress, Proactively Experiment to Improve, Limit Work in Progress—provide details to implement simple agility, whether you are seeking higher value (such as with Lean Startup) or lower cost (such as with Scrum or Lean Manufacturing). To sustain agility, two additional sub-patterns—Embrace Collective Responsibility and Systems Thinking—complete the picture.

Many additional patterns arise as sub-sub-patterns. However, this pattern and the six sub-patterns are important enough to get a designation of their own: the Agile Base Patterns.

References

Acknowledgements

[I am seeking reviewers. If you would like to trade detailed reviews for a complimentary copy of the final work, please contact me through the contact link.]

Author

Dan Greening